Recently, language models have become pretty popular with businesses. In fact, a recent survey found that 60% of tech leaders said their budgets for AI language tech increased by at least 10% in 2020.

There are a few different types of language models, however. If you've looked at the title, you might've gotten a bit of a spoiler. General-purpose models like OpenAI's GPT-3, which tend to be large and dominant, are emerging as the most popular choice.

There are also a number of models that are fine-tuned for particular tasks, and a third group that tends to be highly compressed in size and limited to a few capabilities. These are designed specifically to run on Internet of Things devices and workstations.

But what are large language models? Well, these are models that predict what word comes next. Simple as. An LLM assigns a probability to a sequence of words, estimating how likely that sequence is to have come from a human writer.

LLMs are basically probabilistic representations of language, which are built using large neural networks that consider the context of words and improve on word embeddings.
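To make "predict what word comes next" concrete, here is a deliberately tiny Python sketch. It is a simplified stand-in, not how GPT-3 works: instead of a large neural network, it counts word pairs (a bigram model) in a made-up toy corpus, then uses those counts to estimate next-word probabilities and, via the chain rule, the probability of a whole word sequence. The corpus and helper names are hypothetical. Real LLMs condition on much longer contexts, but they output the same kind of thing: a probability for each possible next word.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real LLMs are trained on hundreds of gigabytes of text.
corpus = "the cat sat on the mat . the cat ate the fish .".split()

# Count how often each word follows each other word (a bigram model).
follow_counts: dict[str, Counter] = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    follow_counts[prev][nxt] += 1

def next_word_probs(prev: str) -> dict[str, float]:
    """Estimate P(next word | previous word) from the bigram counts."""
    counts = follow_counts[prev]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}

def sequence_prob(words: list[str]) -> float:
    """Chain rule: multiply the probability of each word given the word before it."""
    prob = 1.0
    for prev, nxt in zip(words, words[1:]):
        prob *= next_word_probs(prev).get(nxt, 0.0)
    return prob

print(next_word_probs("the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(sequence_prob(["the", "cat", "sat"]))  # 0.25
```

A sequence containing a word pair the model has never seen gets probability 0 here; neural LLMs avoid that by generalising from context instead of relying on exact counts.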

"But LXA", you might be saying, "how can an AI write if it can't even hold a pen?" Well, let's take a look, dear reader.

So, What Specifically Are Large Language Models?

LLMs are tens of gigabytes in size and are trained on enormous amounts of text data, sometimes reaching the petabyte scale.

“Large models are used for zero-shot scenarios or few-shot scenarios where little domain-[tailored] training data is available and usually work okay generating something based on a few prompts,” Fangzheng Xu, a PhD student at Carnegie Mellon specializing in natural language processing, told TechCrunch. In machine learning, the term 'few-shot' refers to the practice of training a model with minimal data, whereas 'zero-shot' describes a model that can recognise things it hasn't seen during training.

“A single large model could potentially enable many downstream tasks with little training data,” Xu continued.
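With models like GPT-3, few-shot use often means placing a handful of worked examples directly in the prompt rather than retraining the model, while zero-shot means giving only the instruction. The Python sketch below just assembles both kinds of prompt as plain strings. The helper `build_prompt`, its text layout, and the example sentence pairs are all hypothetical, and no model is called; the resulting text is what you would send to whichever model you use.

```python
def build_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a text prompt: an instruction, optional worked examples, then the new input.

    With no examples this is a zero-shot prompt; with a few, it is a few-shot prompt.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"English: {source}")
        lines.append(f"French: {target}")
        lines.append("")
    lines.append(f"English: {query}")
    lines.append("French:")  # the model is expected to continue the text from here
    return "\n".join(lines)

# Hypothetical demonstration pairs for the few-shot case.
demos = [("Good morning.", "Bonjour."), ("Thank you very much.", "Merci beaucoup.")]

zero_shot = build_prompt("Translate English to French.", [], "Where is the station?")
few_shot = build_prompt("Translate English to French.", demos, "Where is the station?")
print(zero_shot)
print("---")
print(few_shot)
```

The only difference between the two prompts is the examples; the model's weights stay exactly the same, which is why a single large model can serve many tasks with little task-specific data.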

In July 2020, OpenAI unveiled GPT-3, the largest language model of its kind at the time. Model developers and early users found that it was pretty darn clever: it could write convincing essays, create charts and websites from text descriptions, and even generate computer code, all with little to no supervision.

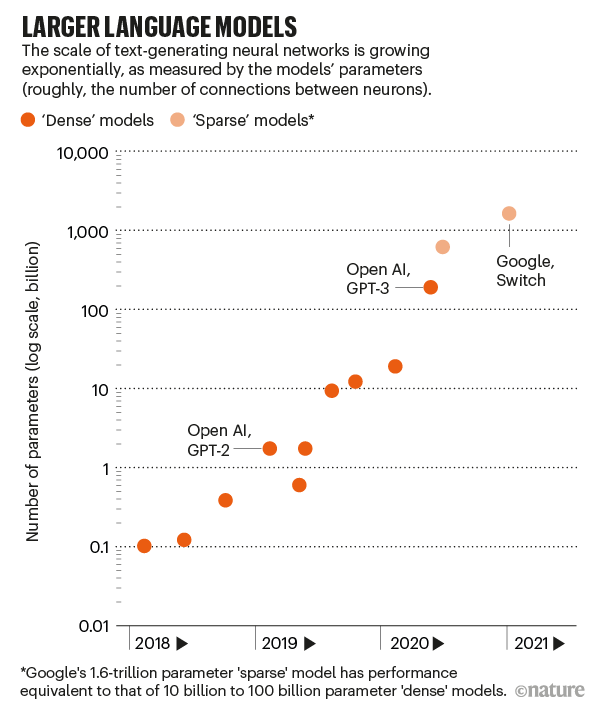

GPT-3 has 175B parameters and was trained on 570 gigabytes of text. A parameter refers to a value the model can change independently as it learns. For comparison, its predecessor GPT-2 was over 100x smaller, at 1.5B parameters.
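The scale gap is easy to check with the figures above, and a toy calculation shows where parameter counts come from. The sketch below assumes a plain fully connected (dense) layer, in which every input-output connection has one weight and every output has one bias. GPT-3 is actually a transformer built from many layers of several kinds, so `dense_layer_params` is a hypothetical illustrative helper, not a way to reproduce the 175B figure.

```python
# Parameter counts quoted in the article.
GPT3_PARAMS = 175e9
GPT2_PARAMS = 1.5e9

def dense_layer_params(n_in: int, n_out: int) -> int:
    """Parameters in one fully connected layer: one weight per input-output pair plus one bias per output."""
    return n_in * n_out + n_out

ratio = GPT3_PARAMS / GPT2_PARAMS
print(f"GPT-3 has about {ratio:.0f}x the parameters of GPT-2")  # about 117x
print(dense_layer_params(4, 3))  # 15: 12 weights + 3 biases
```

Each of those numbers is a value the model adjusts during training, so a model with 175B of them has vastly more room to capture patterns in text than one with 1.5B.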

This increase drastically changes the behaviour of the model: the newer model can perform tasks it was not explicitly trained for, such as translating sentences from English to French. On some of these tasks, GPT-3 even outperforms models that were explicitly trained to solve them.

What Can LLMs Do?

Right, I know you're here to find out whether your content team is safe.

By inferring word probabilities from context, LLMs can build an abstract understanding of natural language, which can be used for a bunch of different tasks.

Natural Language Generation, a branch of natural language processing, focuses on producing natural human language text. Improvements in this technology have made it possible to train LLMs to generate realistic, human-like text. As a result, LLMs have been able to create articles, poetry, stories, news reports, and dialogue from just a small amount of input text.
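Generating a whole passage from a short input works by repeating next-word prediction: the model picks a word, appends it to the text, and predicts again. The Python sketch below shows that loop with temperature-controlled sampling, a common way to trade predictability for variety. The tiny hard-coded probability table `NEXT_WORD` is a hypothetical stand-in for a real model, which would compute these probabilities with a neural network over the whole preceding text rather than just the last word.

```python
import math
import random

# Hypothetical next-word probabilities standing in for a trained model.
NEXT_WORD = {
    "once": {"upon": 0.9, "more": 0.1},
    "upon": {"a": 1.0},
    "a": {"time": 0.7, "cloud": 0.3},
    "time": {"<end>": 1.0},
    "more": {"<end>": 1.0},
    "cloud": {"<end>": 1.0},
}

def sample_next(probs: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sample a word after raising each probability to 1/temperature (lower = more predictable)."""
    weights = [math.exp(math.log(p) / temperature) for p in probs.values()]
    return rng.choices(list(probs), weights=weights, k=1)[0]

def generate(prompt: str, temperature: float = 1.0, max_words: int = 10, seed: int = 0) -> str:
    """Autoregressive loop: predict a word, append it, repeat until <end> or max_words."""
    rng = random.Random(seed)
    words = prompt.split()
    for _ in range(max_words):
        probs = NEXT_WORD.get(words[-1])
        if not probs:
            break  # the toy table has no prediction for this word
        word = sample_next(probs, temperature, rng)
        if word == "<end>":
            break
        words.append(word)
    return " ".join(words)

print(generate("once", temperature=0.5))
```

At a very low temperature the loop almost always takes the most likely word at each step; higher temperatures make less likely continuations such as "a cloud" show up more often.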

GPT-3 absorbs patterns wherever it can. This means it can recognise grammar, essay structure, and even writing genre.

It also excels at tailoring its response to the style and content of its input text; shaping that input to get the output you want has been described as 'prompt programming'. “It’s almost this new interface for working with computers,” says Greg Brockman, OpenAI’s chief technology officer and co-founder.

To show off the power of the model, poet Andrew Brown gave the programme this prompt:

“The poetry assignment was this: Write a poem from the point of view of a cloud looking down on two warring cities. The clever student poet turned in the following rhyming poem:”

GPT-3 responded:

“I think I’ll start to rain,

Because I don’t think I can stand the pain,

Of seeing you two,

Fighting like you do.”

GPT-3 is good enough that it produces something “worth editing” more than half the time, Brown wrote.

Take this short story, for example. It was produced by GPT-3's predecessor, GPT-2, and published by OpenAI in 2019:

SYSTEM PROMPT (HUMAN-WRITTEN)

In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English.

MODEL COMPLETION (MACHINE-WRITTEN, 10 TRIES)

The scientist named the population, after their distinctive horn, Ovid’s Unicorn. These four-horned, silver-white unicorns were previously unknown to science.

Now, after almost two centuries, the mystery of what sparked this odd phenomenon is finally solved.

Dr. Jorge Pérez, an evolutionary biologist from the University of La Paz, and several companions, were exploring the Andes Mountains when they found a small valley, with no other animals or humans. Pérez noticed that the valley had what appeared to be a natural fountain, surrounded by two peaks of rock and silver snow.

Pérez and the others then ventured further into the valley. “By the time we reached the top of one peak, the water looked blue, with some crystals on top,” said Pérez.

Pérez and his friends were astonished to see the unicorn herd. These creatures could be seen from the air without having to move too much to see them – they were so close they could touch their horns.

While examining these bizarre creatures the scientists discovered that the creatures also spoke some fairly regular English. Pérez stated, “We can see, for example, that they have a common ‘language,’ something like a dialect or dialectic.”

Dr. Pérez believes that the unicorns may have originated in Argentina, where the animals were believed to be descendants of a lost race of people who lived there before the arrival of humans in those parts of South America.

While their origins are still unclear, some believe that perhaps the creatures were created when a human and a unicorn met each other in a time before human civilization. According to Pérez, “In South America, such incidents seem to be quite common.”

However, Pérez also pointed out that it is likely that the only way of knowing for sure if unicorns are indeed the descendants of a lost alien race is through DNA. “But they seem to be able to communicate in English quite well, which I believe is a sign of evolution, or at least a change in social organization,” said the scientist.

Large Language Models and Content Marketing Careers

But note that this result took 10 tries, with a human picking the best of the machine's attempts. This is where the human aspect comes in. For both writing and art, these models still need a well-trained individual who knows how to work the algorithm.

Right, down to the nitty-gritty. How's all this going to impact us, the hard-working marketers and content writers? Well, this is only the beginning of marketing AI.

Artificial intelligence might not just automate or augment certain marketing activities; it could also change how marketing channels work and which skill sets are needed to thrive in the near future.

This may come as part of the shift to Industry 5.0.

Industry 5.0 is a new production model that focuses on cooperation between humans and machines. It reflects the recognition that technological advances and human insight and creativity are equally important.

But what makes it 5.0? The previous tier, Industry 4.0, emerged with the arrival of automation technologies, IoT, and the smart factory. These advancements gave rise to the digital industry, which produced a new type of technology that can offer companies data-driven knowledge.

Industry 5.0 comes next, leveraging the collaboration between high-tech machinery and tools on one side and the innovation and agility of human beings on the other.

So, the skills valued in marketers will change as AI systems become able to optimise search and paid campaigns automatically. Once the technology can generate insightful reports, the type of analysis required of, and valued by, marketers will be different.

Plus, if AI can produce simple, short content, content marketers will need to focus on different kinds of content. Think fewer social posts, more opinion-based pieces.

So, we all know marketing skills change with the times. But with marketing AI, these skills will change faster than in the past. This doesn't mean humans will lose their advantage in the industry. Instead, they will have to identify the spaces where AI is weak, and where human creativity and agility are indispensable.

AI and Visual Art Creation

From the Demogorgon from Stranger Things holding a basketball to a man withdrawing a stack of fish from an ATM, people have been using AI image generators to make their wildest dreams a reality.

Much of this has been done with Craiyon (formerly known as DALL-E mini), an image generator inspired by OpenAI's DALL-E but not made by OpenAI. According to its creator, Boris Dayma, users have been creating around 5M AI-generated images per day.

Dayma says he has even heard from people using Craiyon to come up with logos for new businesses and to generate imagery for videos.

This shows the potential for this tech to go beyond memes and to automate and streamline content creation. Imagine hyper-specific featured images on blog articles. A group of happy, diverse colleagues high-fiving...on the moon, please.

So, it raises the question of what this AI will mean for the future of visual content creation. We've already explored what this might mean for written content, but artists and graphic designers always thought they were sitting outside the splash zone. Well, here comes Shamu.

But all of this is still a work in progress. OpenAI has only just released its tech to a limited group. The beta, launched the other day, will make DALL-E available to 1M people on the waitlist.

On the other hand, Google's Imagen AI image generator remains behind closed doors.

This might be due to the unpolished nature of the tech, where any failings could lead to outputs that reinforce stereotypes and biases. Remember Microsoft's AI chatbot Tay?

“We share people’s concerns about misuse, and it’s something that we take really seriously,” OpenAI researcher Mark Chen said.

The firm also seems to be covertly modifying DALL-E 2 prompts in an attempt to make the model seem less racially and gender-biased. Users have discovered that keywords such as “black” or “female” are being added to the prompts they submit.